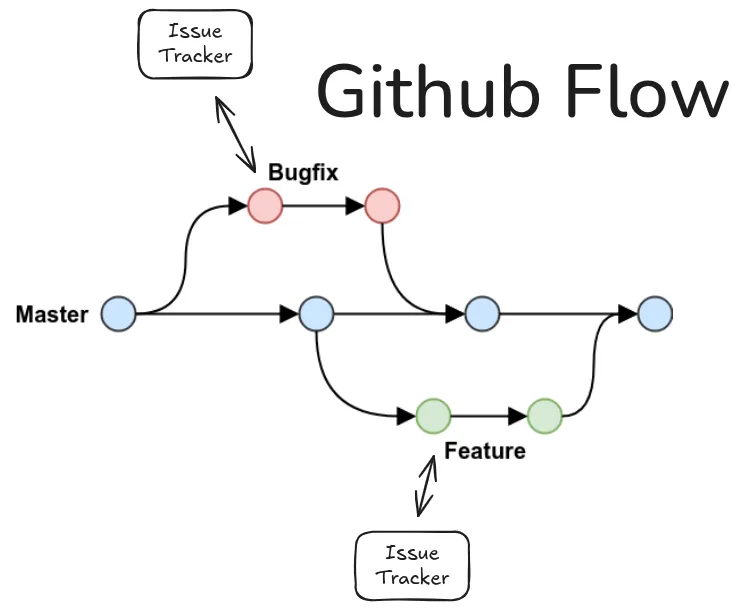

One of the most common coding workflows I have seen over the years is the GitHub flow. It is tailored to their product and promotes a simple but effective way of collaborating as a team.

GitHub flow is a lightweight, branch-based workflow. The GitHub flow is useful for everyone, not just developers.

~ GitHub

What have I been doing? Learning a new coding workflow

This is a parallel flow…

~ me

As a training exercise, while I am still searching for a new job opportunity, I am using AI a lot… seriously, A LOT! And it is impossible not to try this way of coding: Agents.

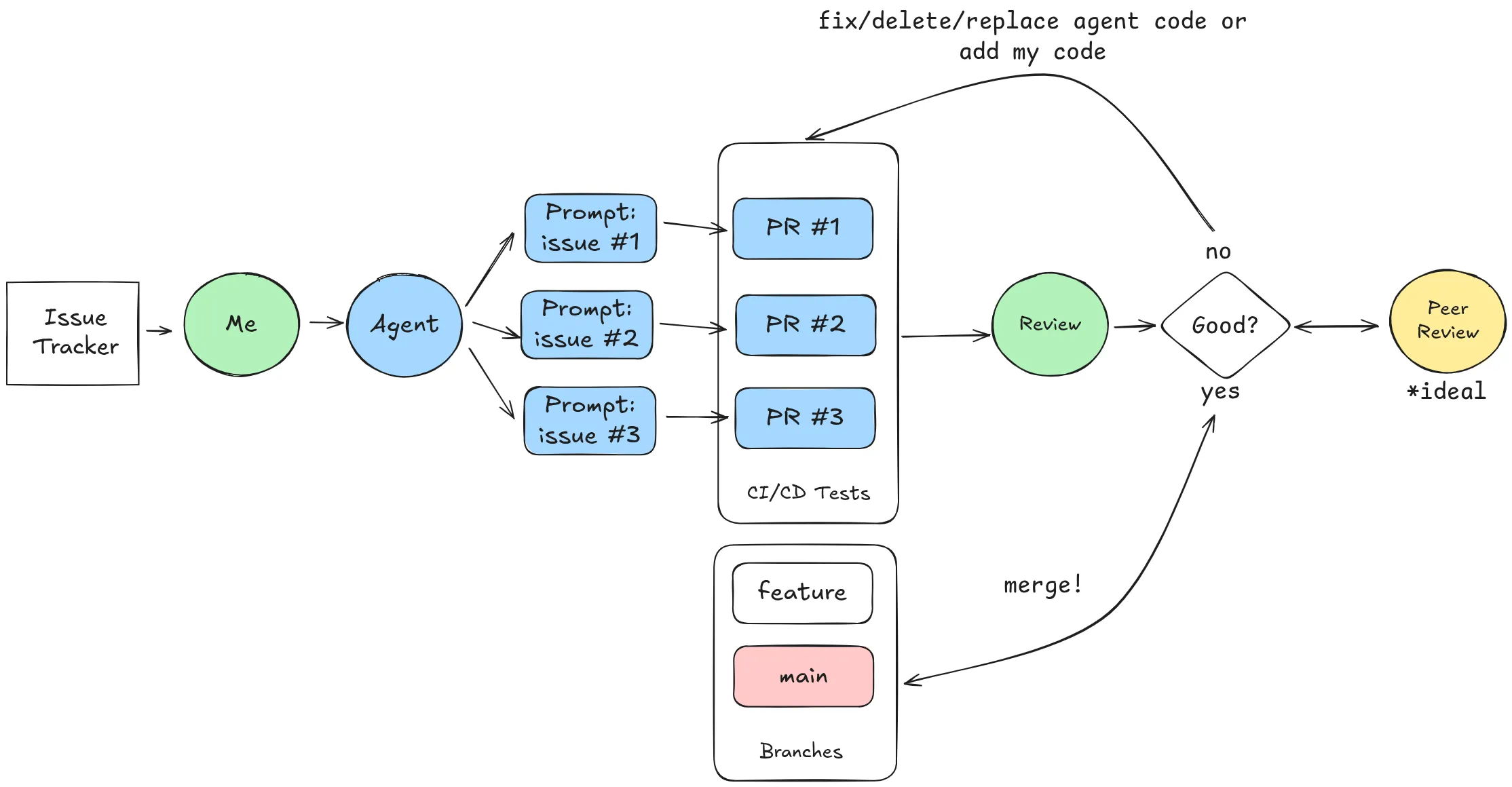

The image shows how I have tried to get as close as possible to a real job scenario when coding, starting with an issue tracker. Mine is Notion:

- Like in any project, I create issues… fairly vague or extremely detailed, and not technical.

- I then write a prompt that I give to the Agent to implement a feature/fix from my issue.

- The agent creates a GitHub Pull Request. Or I could also just upload the resulting code from an AI, or something similar, to GitHub.

- I review the PR:

  - If I like it: merge!

  - If I don’t like it, I modify it myself and commit the change.

  - or… if I don’t like it, I write a new prompt for a new commit from the agent.
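The loop above can be sketched in a few lines of Python. This is only an illustration of the flow as described: the names `Review`, `next_step` and `run_flow` are hypothetical and do not come from GitHub, Notion or any agent tool.

```python
from enum import Enum


class Review(Enum):
    """Possible outcomes of reviewing the agent's pull request (hypothetical names)."""
    LIKED = "liked"
    FIX_MYSELF = "fix_myself"
    REPROMPT = "reprompt"


def next_step(review: Review) -> str:
    """Map a review outcome to the next action in the flow."""
    if review is Review.LIKED:
        return "merge"
    if review is Review.FIX_MYSELF:
        return "commit my own change"
    return "write a new prompt for the agent"


def run_flow(reviews: list[Review]) -> list[str]:
    """Walk one issue through the flow, one review round at a time, until it is merged."""
    steps = ["create issue", "write prompt", "agent opens PR"]
    for review in reviews:
        steps.append("review PR")
        action = next_step(review)
        steps.append(action)
        if action == "merge":
            break  # nothing left to review once the PR is merged
    return steps


if __name__ == "__main__":
    # One round of re-prompting, then a merge.
    for step in run_flow([Review.REPROMPT, Review.LIKED]):
        print(step)
```

The point of the sketch is that the review is the only branching step: everything before it is linear, and the PR keeps cycling through review until it gets merged.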

This is what I have learned so far

Positive

- I’m F A S T. At what? Creating more and more folders in my graveyard directory of projects. I am really fast.

- No, seriously, I am faster at: mockups, prototypes, proofs of concept and project validation. I have been deploying small projects in a matter of 2 to 3 hours.

- Testing code: Unit testing is pretty much covered, depending on the agent you are using of course. I have focused only on a couple of edge cases after reading the first tests written by the Agent, which gave me a certain level of confidence that the basics are pretty much in check.

- Testing live: Once my project is deployed on one of my domains, production testing is really easy. Finding errors so quickly in the environment that is supposed to be live and productive has been really important for me. Be it:

  - CORS.

  - a blocked/unreachable resource.

  - the platform is not as fast as I thought it would be once the production build is deployed.

  - I misconfigured the prod CDN…

- Learning new languages. I learned Go just by using AI.

- Documentation. I got better at documentation. From stories, to PR descriptions, to code comments. I just need to write everything down to keep pace with the code changes. When it comes to finding my notes, the TODO/FIXME/NOTE comments became lifesavers. I realised that in PRs there will always be a +1 in the list of participants, in the form of what I told the agent to code, so I need to make sure that we all understand what is going on.

- I am improving as a prompt engineer…

- Coding concurrency. This is the biggest lesson from my experience. Read below!

Not bad, but… challenging

- Conversation context. If there is an error that I am trying to solve and I don’t “reset” or start a new conversation, the agent gets stuck or keeps wandering back to previous errors that may already be fixed. And if they aren’t fixed, I have not found a way to make it discard the previous approach and try something new. My prompting skills are tested in these situations. I definitely need to work on this.

- Reading so much code. Depending on the agent you are using and the task you are trying to implement, you will end up reading a lot of source code. I have been reading code in languages that I know and especially in languages that I don’t know, and it is challenging to absorb the output of the agent in the pull request. Solution? I started asking the agents to generate a Markdown file for every Pull Request they open, plus comments on the critical implementations. That serves as a great starting point. The days of the 2-line pull request with 1000 comments versus the 1000-line pull request with 1 comment are over, folks…

- Frameworks, libraries and integrations. If the agent doesn’t know it, you have to know how to best teach it to get the best outcome.

- Idiomatic code. I haven’t formed an opinion about it yet, but coming from the Ruby/Rails world, purism about syntax and linters could pose a HUGE challenge for a team of people using agents that don’t have the context of the company’s guidelines.

Coding Concurrency™

Once I submit the prompt with the task that I want the agent to accomplish, there is some waiting… the agent should take its time and figure out the how. When I started these coding-with-agents sessions, I was using the Pomodoro technique (25 minutes coding, 5 minutes break). During the 25 minutes that I was supposed to be coding, I found myself just waiting. I was waiting for the agent to do what I wanted, and at that moment I didn’t know how long it would take until it first needed my input or simply finished the task. So? Coding Concurrency™ enters the session.

I started working on several projects concurrently. Every time an agent is busy accomplishing a task, I am either:

- giving it another task in the same project for a different branch.

- creating another task in another project.

and I kept going until I realised that it was not sustainable to work on more than 3 projects at the same time, or even to create more than 3 tasks in the same project:

- With more than 2 projects, keeping the context and following the coding flow became really hard for me. I just couldn’t keep up.

- With more than 3 tasks per project, there is a high chance of not being able to merge the branches right away, unless the tasks are completely unrelated.

- I couldn’t do more than 6-7 Poms (~3 hours). I was just exhausted. It was really hard to keep my thoughts organised and even to think about what to do next.
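Those limits could be written down as a tiny guard that says "no" before another task gets handed to an agent. This is a rough sketch under stated assumptions: `Session`, `MAX_PROJECTS` and `MAX_TASKS_PER_PROJECT` are hypothetical names, and the numbers (2 projects, 3 tasks per project) are taken from the bullets above, not from any tool.

```python
# Assumed limits, taken from the bullets above (hypothetical constants).
MAX_PROJECTS = 2            # more projects than this and the context gets lost
MAX_TASKS_PER_PROJECT = 3   # more branches than this rarely merge right away


class Session:
    """Tracks which tasks are currently in an agent's hands, per project."""

    def __init__(self) -> None:
        self.active: dict[str, list[str]] = {}

    def can_start(self, project: str) -> bool:
        """Return True if one more task in `project` stays within the limits."""
        tasks = self.active.get(project)
        if tasks is None:
            # A new project counts against the project limit.
            return len(self.active) < MAX_PROJECTS
        return len(tasks) < MAX_TASKS_PER_PROJECT

    def start(self, project: str, task: str) -> bool:
        """Register a task if allowed; return whether it was accepted."""
        if not self.can_start(project):
            return False
        self.active.setdefault(project, []).append(task)
        return True

    def finish(self, project: str, task: str) -> None:
        """Remove a finished task; drop the project once it has no tasks left."""
        tasks = self.active.get(project, [])
        if task in tasks:
            tasks.remove(task)
        if not tasks:
            self.active.pop(project, None)


if __name__ == "__main__":
    session = Session()
    print(session.start("blog", "fix-cors"))     # accepted
    print(session.start("api", "add-endpoint"))  # accepted
    print(session.start("cli", "new-command"))   # rejected: third project
```

The guard does nothing clever. It just makes the limit explicit, so the decision to open yet another branch is made before the agent starts and not after the merge conflicts show up.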

Let me know what you think…

me[at]vanhalt[dot]com

disclaimer: I didn’t use AI to grammar proof this post. English is not my native language. I am sorry about your eyes!